Docker networking

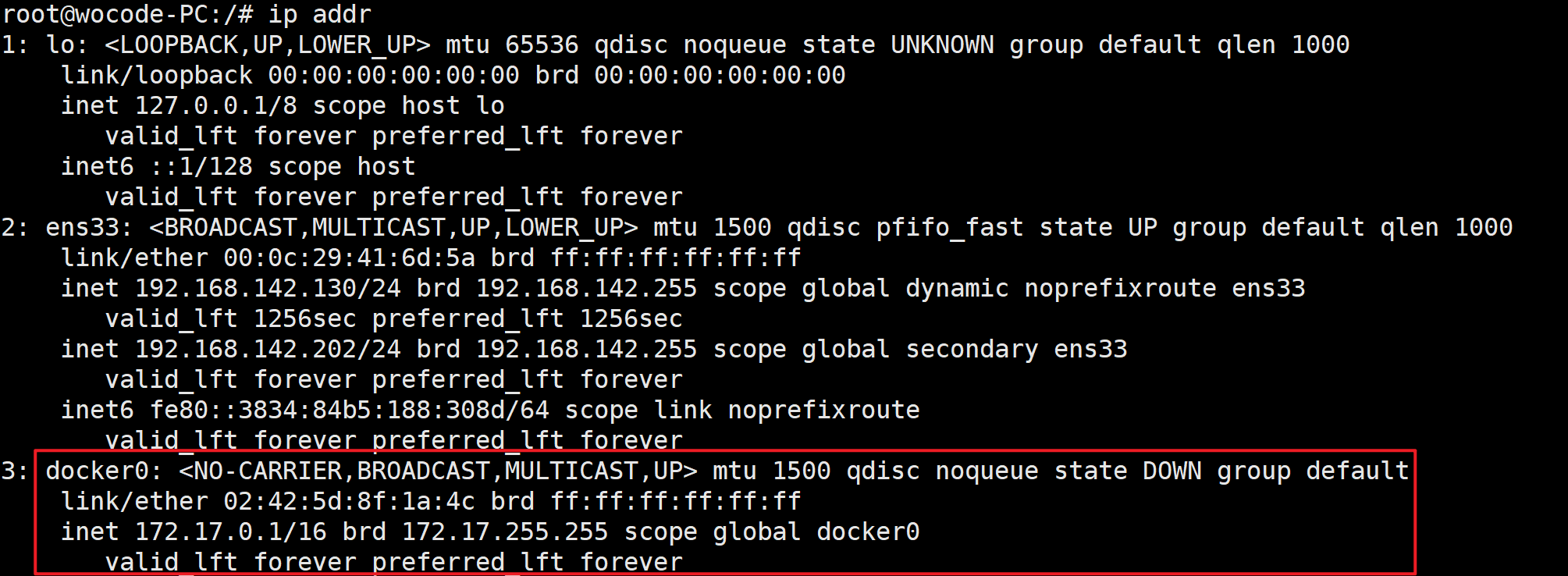

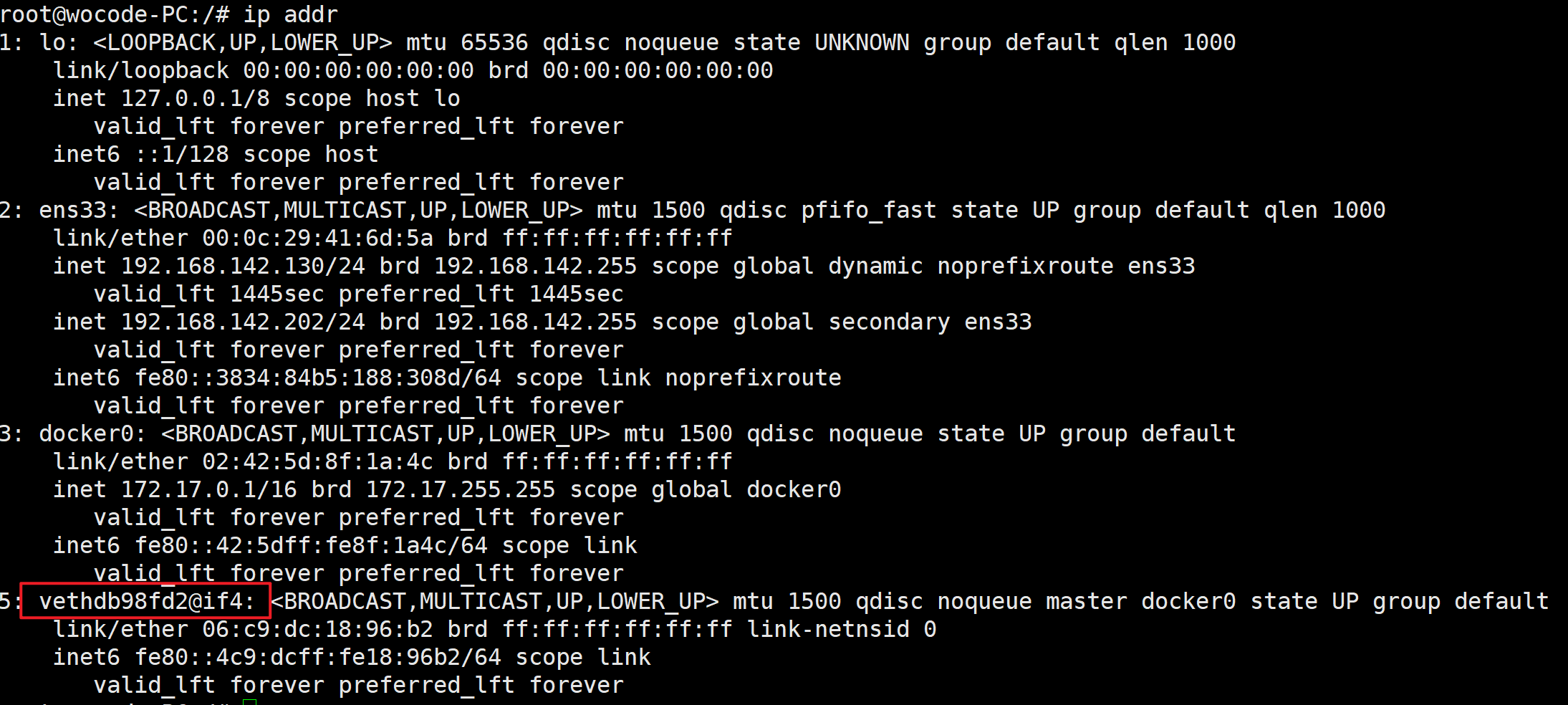

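Networking is one of the most important parts of Docker and a prerequisite for running Docker clusters. Once Docker is installed on a Linux system, a new network interface named docker0 appears on the host; running `ip addr` shows it.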

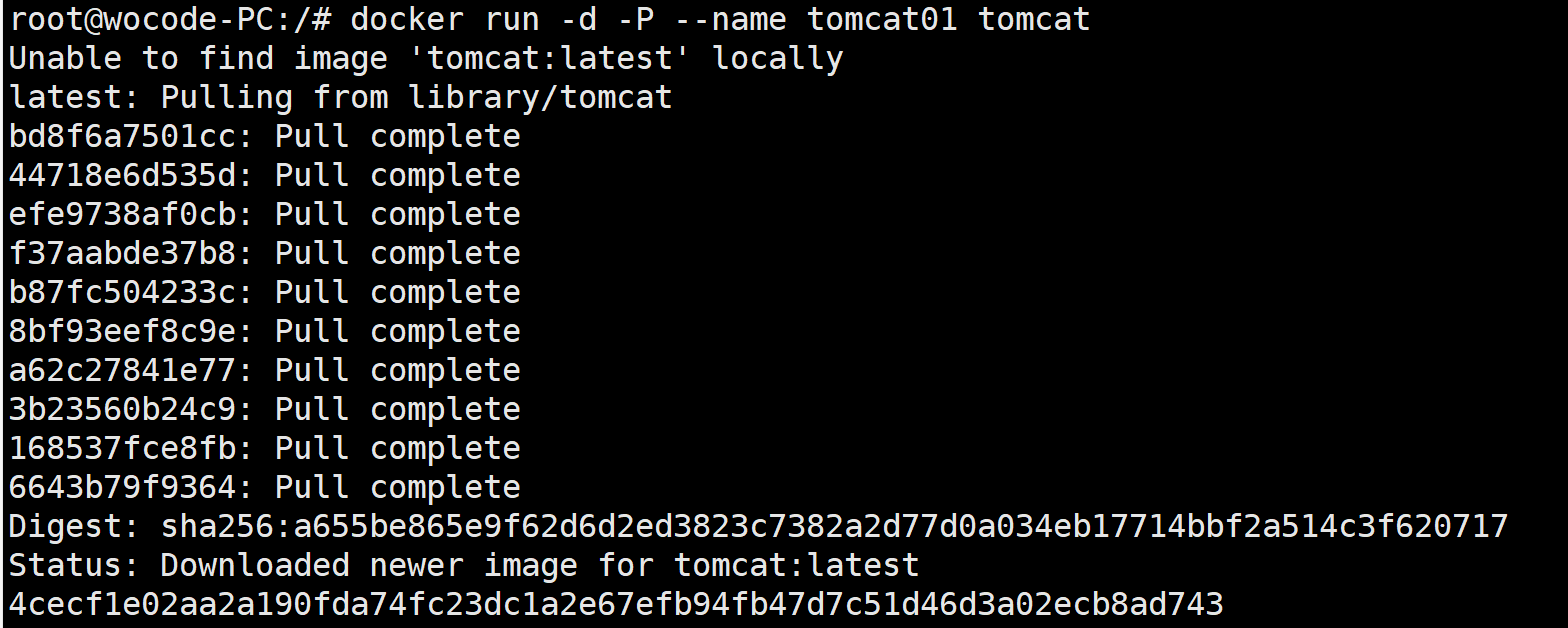

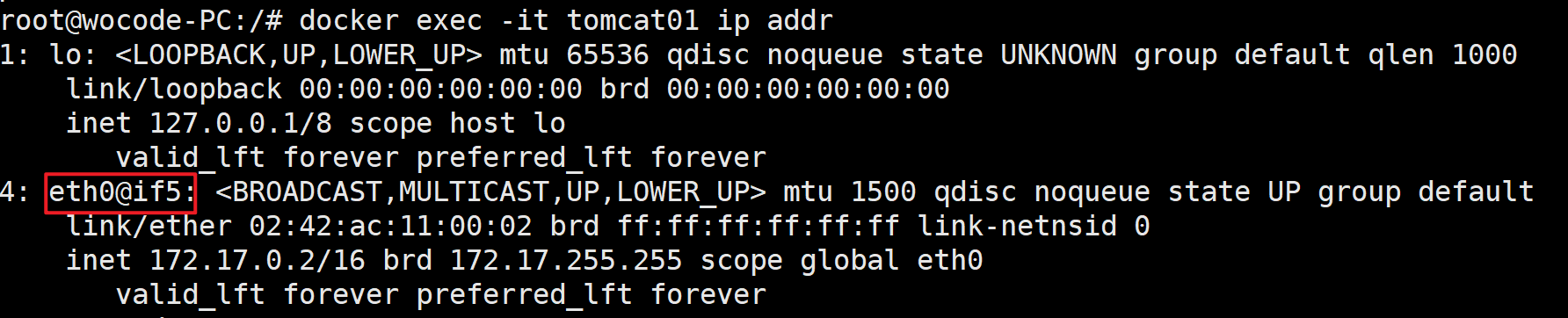

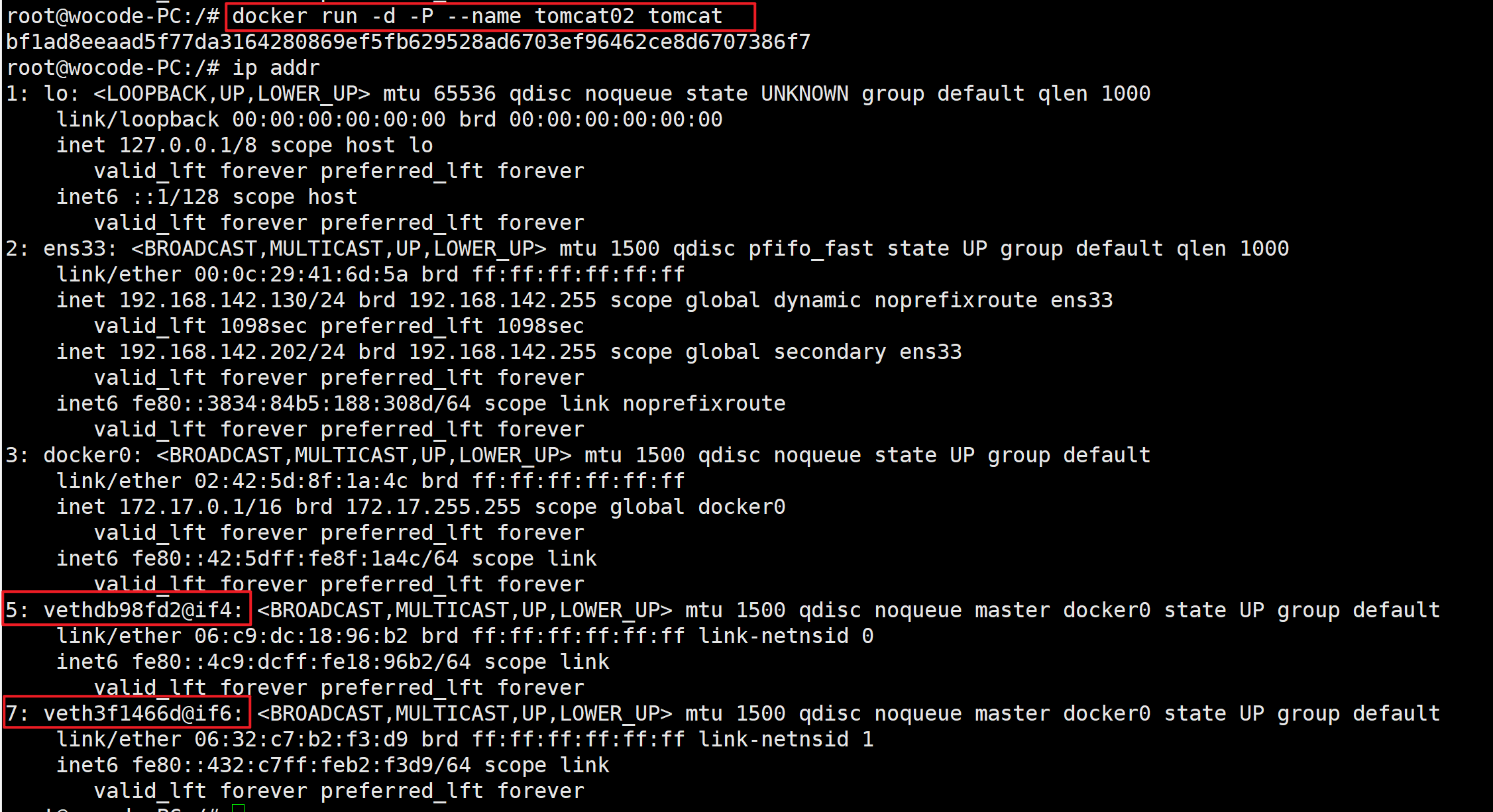

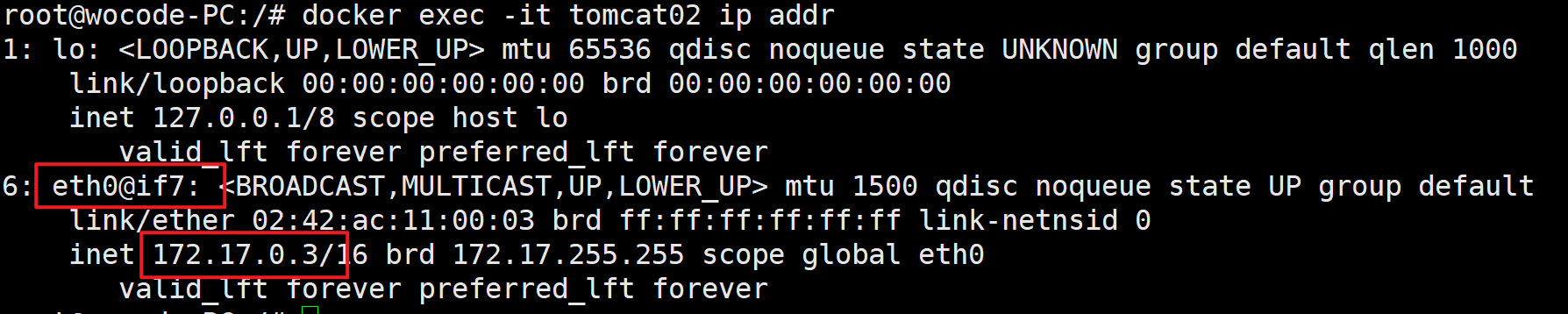

现在以tomcat为例子,使用docker拉取tomcat镜像并创建容器后查看tomcat容器内部网卡信息。发现docker在创建tomcat容器时会给其分配了一个名为

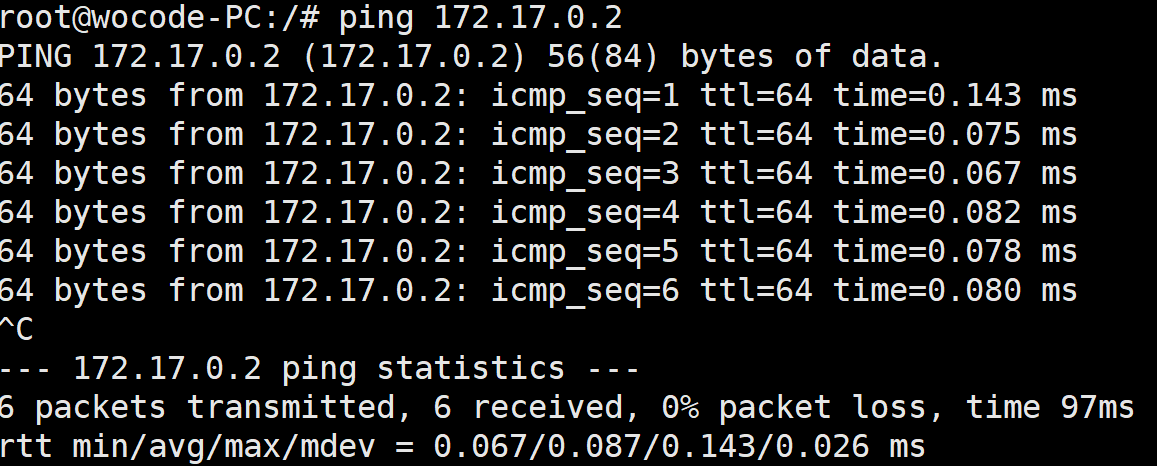

eth0@if5的网卡,对应分配的ip地址为172.17.0.2,且发现主机可以ping通容器内部。![]()

Listing the host's interfaces again shows a new one: vethdb98fd2@if4.

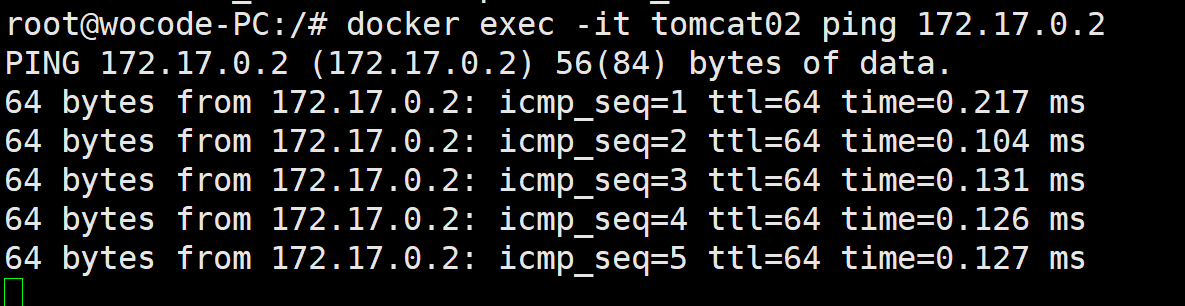

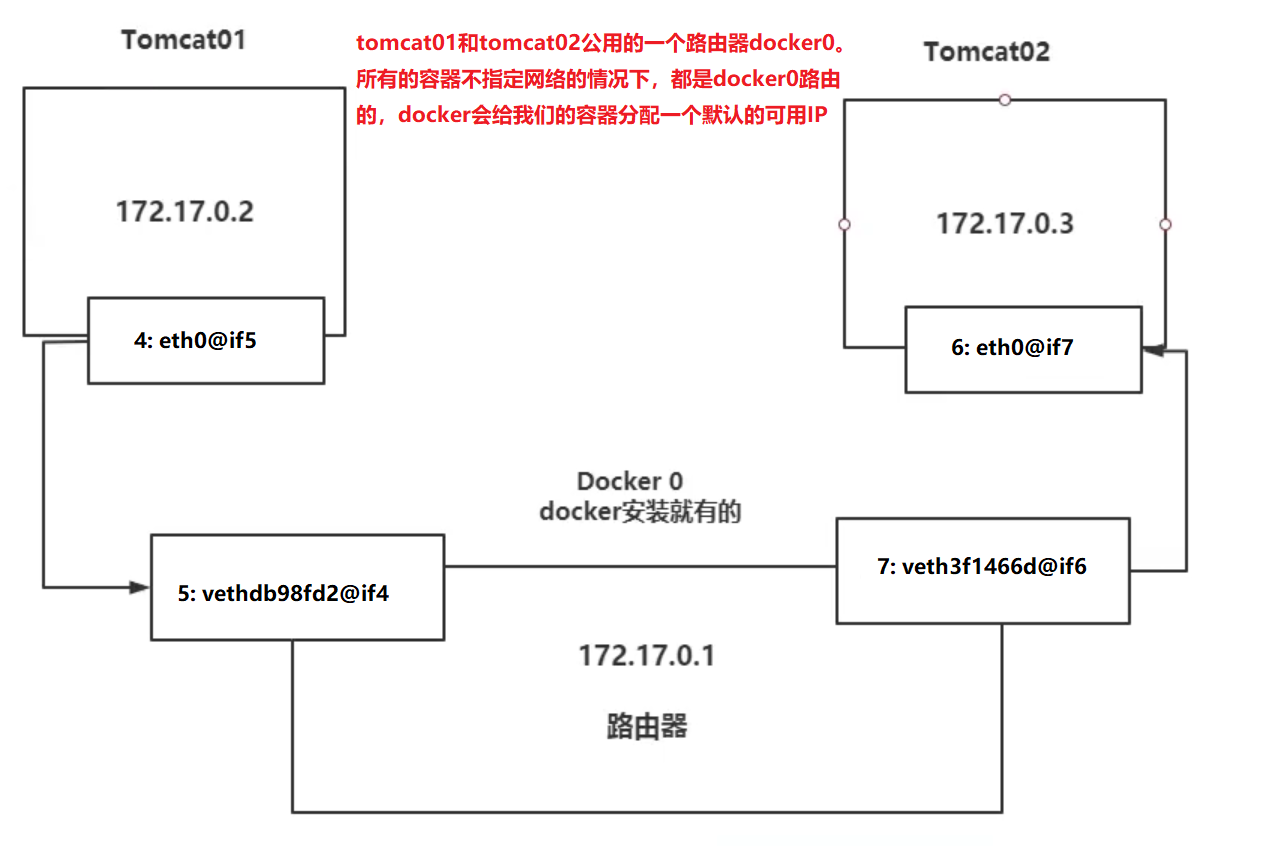

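Starting a second container adds another interface on the host, veth3f1466d@if6, and the new container gets its own IP address inside the docker0 subnet. The conclusion: every time a container is created, Docker creates a veth virtual interface on the host and a matching eth0 inside the container (which by default receives an IP address from the docker0 subnet). This pair is what lets containers talk to each other and to the host.

A quick test confirms that the tomcat01 and tomcat02 containers can also reach each other.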

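Docker networking relies on veth pairs. A veth pair is a pair of virtual interfaces that always come together, and because of that property a veth pair serves as a bridge connecting virtual network devices. Take the tomcat01 container tested above: after it starts, the host has the interface "5: vethdb98fd2@if4" and the container has "4: eth0@if5". The number after @if on each side is the index of the interface on the other side (4 and 5 here), which shows that the two are peers, and this link is what lets the host and the container communicate. The following diagram illustrates this: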

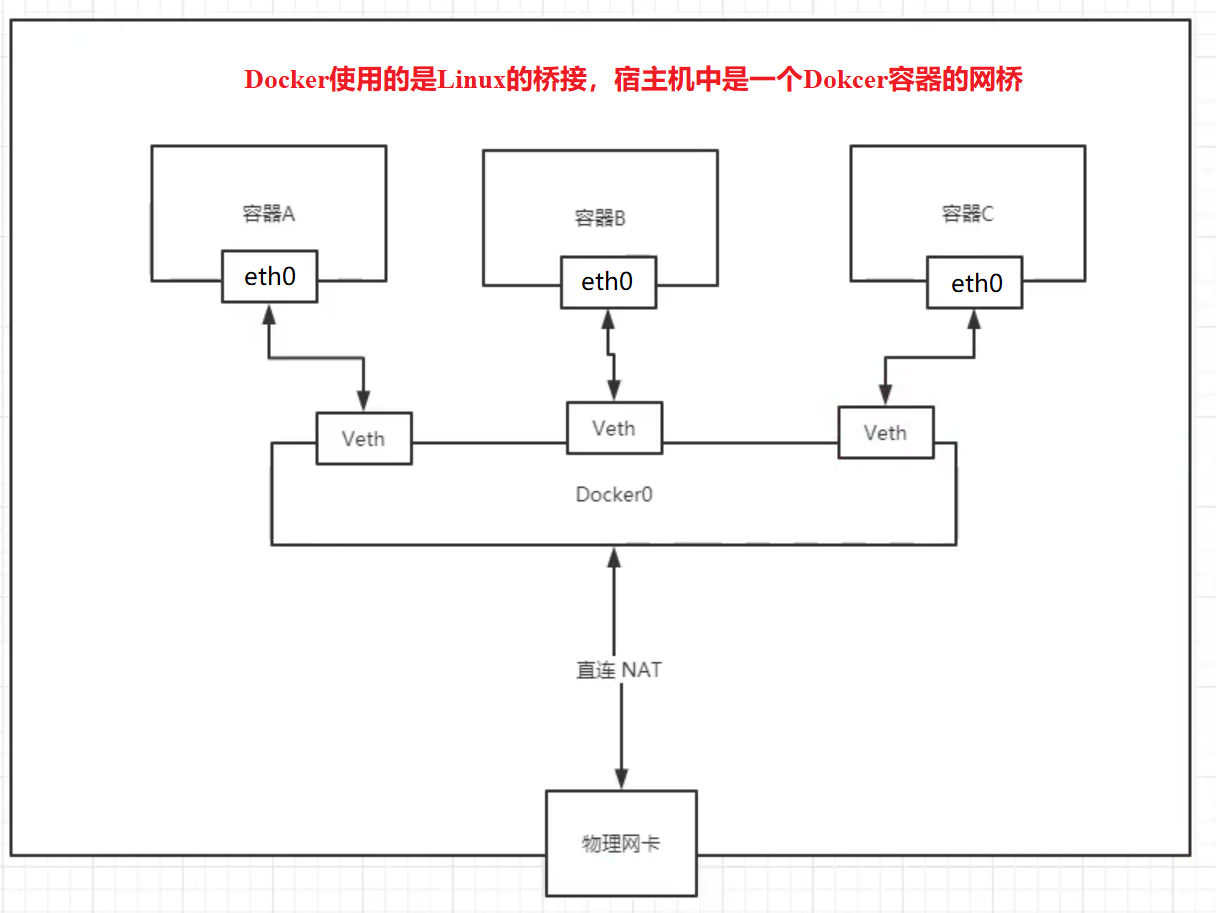
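The index notation can be checked mechanically. The sketch below, in POSIX shell, takes two interface headers as printed by `ip addr` and tests whether each one's `@if` suffix names the other's index. The helper names `link_index`, `peer_index`, and `is_veth_pair` are hypothetical and are not part of iproute2 or Docker; the sample strings are the ones observed above. It is only meaningful for headers that actually carry an `@if` suffix.

```shell
#!/bin/sh
# Hypothetical helpers for reading `ip addr` headers such as "5: vethdb98fd2@if4".

# Own interface index: everything before the first ':'.
link_index() {
  printf '%s\n' "${1%%:*}"
}

# Peer interface index: the digits that follow "@if".
peer_index() {
  rest=${1##*@if}             # drop everything up to and including "@if"
  printf '%s\n' "${rest%%:*}" # drop any trailing ": <FLAGS...>" text
}

# Succeeds when each interface names the other one as its peer.
is_veth_pair() {
  [ "$(link_index "$1")" = "$(peer_index "$2")" ] &&
    [ "$(link_index "$2")" = "$(peer_index "$1")" ]
}

if is_veth_pair "5: vethdb98fd2@if4" "4: eth0@if5"; then
  echo "host veth and container eth0 are peers"
fi
```

Feeding it the second container's host-side header (veth3f1466d@if6) together with tomcat01's eth0@if5 fails the check, since those two belong to different pairs.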

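To summarize how Docker sets up container networking (the core idea is Linux virtual networking: a virtual interface is created in the container and another on the host, and the two are joined as a veth pair):

① A veth pair is created on the host. veth devices always come in pairs and form a data channel: whatever enters one end comes out of the other. That is why veth devices are commonly used to connect two network devices.

② Docker places one end of the veth pair in the new container and names it eth0. The other end stays on the host with a name of the form vethxxx and is attached to the docker0 bridge.

③ Docker assigns the container an IP address from the docker0 subnet and sets docker0's IP address as the container's default gateway.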
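Step ③ can be sanity-checked with a little address arithmetic. The following POSIX shell sketch tests whether an address lies inside a subnet. The function names are hypothetical, and 172.17.0.0/16 is assumed here as the docker0 subnet (Docker's usual default; the actual value on a given host may differ and can be read from `ip addr`).

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_subnet ADDRESS NETWORK PREFIX_LENGTH
# Succeeds when ADDRESS and NETWORK share the same first PREFIX_LENGTH bits.
in_subnet() {
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
}

# Assumed docker0 subnet: 172.17.0.0/16.
if in_subnet 172.17.0.2 172.17.0.0 16; then
  echo "172.17.0.2 is inside the docker0 subnet"
fi
```

The same check returns false for an address such as 192.168.0.2, which belongs to a different subnet.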


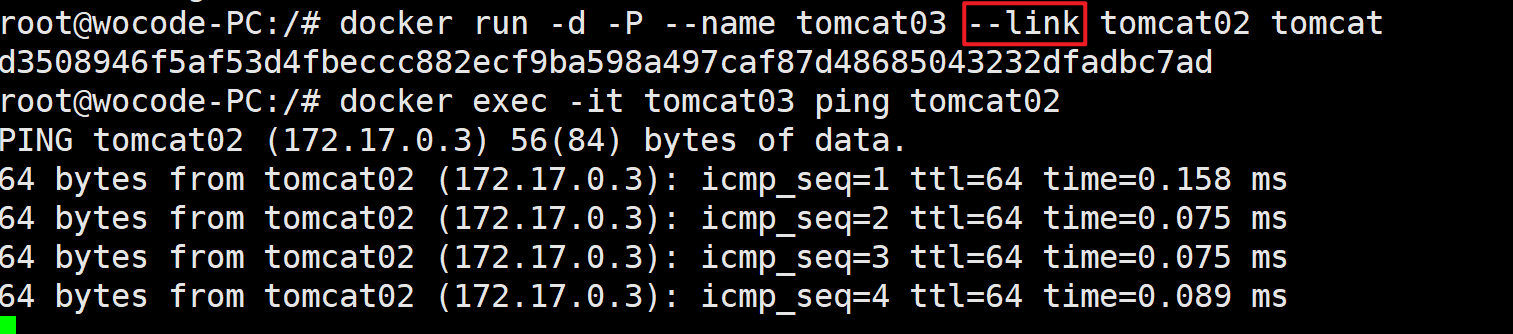

Docker can also connect containers by name instead of by IP address, using the `--link` option.

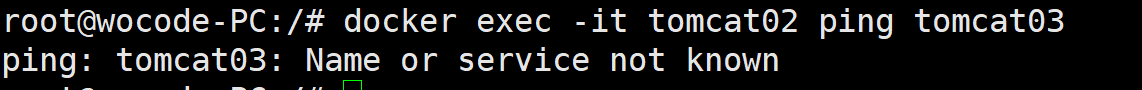

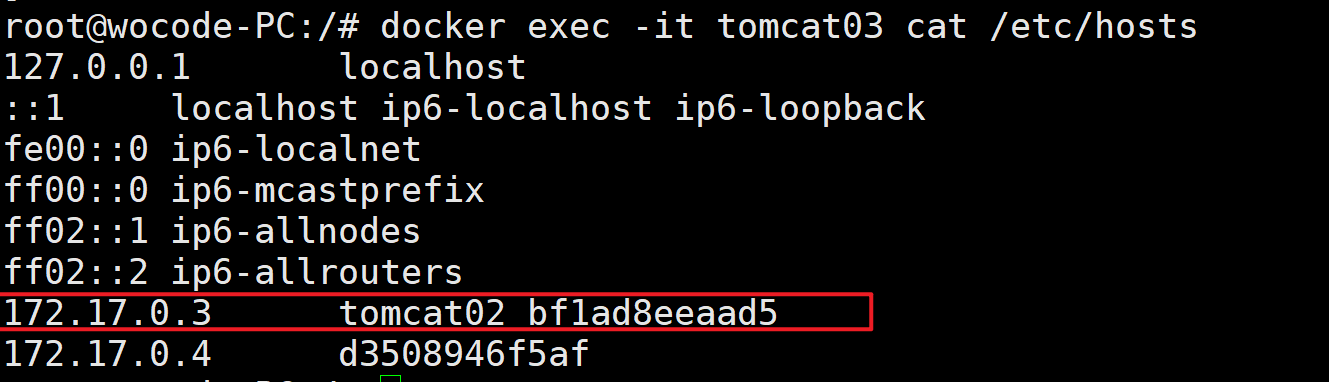

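Because tomcat03 was created with "tomcat03 --link tomcat02", tomcat03 can ping tomcat02, but tomcat02 cannot ping tomcat03. The reason is that the option only adds an entry for tomcat02's IP address to tomcat03's hosts file; nothing changes on the tomcat02 side.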
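The one-way behaviour follows directly from how a hosts file is consulted. The POSIX shell sketch below builds two stand-in hosts files in a temporary directory and looks names up in them. The `resolve` helper is hypothetical, and the addresses 172.17.0.3 and 172.17.0.4 as well as the container IDs are assumed placeholder values, not taken from the run above.

```shell
#!/bin/sh
# Simulate the hosts files of tomcat03 and tomcat02 (all values are placeholders).
tmp=$(mktemp -d)

# tomcat03 was started with --link tomcat02, so it gets an entry for tomcat02.
cat > "$tmp/hosts.tomcat03" << 'EOF'
127.0.0.1    localhost
172.17.0.3   tomcat02 aaaaaaaaaaaa
172.17.0.4   bbbbbbbbbbbb
EOF

# tomcat02 was started without --link, so it only knows about itself.
cat > "$tmp/hosts.tomcat02" << 'EOF'
127.0.0.1    localhost
172.17.0.3   aaaaaaaaaaaa
EOF

# resolve HOSTS_FILE NAME: print the first address whose entry lists NAME.
resolve() {
  awk -v name="$2" '
    /^#/ { next }
    { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
  ' "$1"
}

echo "tomcat03 -> tomcat02: $(resolve "$tmp/hosts.tomcat03" tomcat02)"
echo "tomcat02 -> tomcat03: $(resolve "$tmp/hosts.tomcat02" tomcat03)"
```

The first lookup prints the mapped address, while the second prints nothing, which mirrors the failed ping from tomcat02 to tomcat03.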


使用

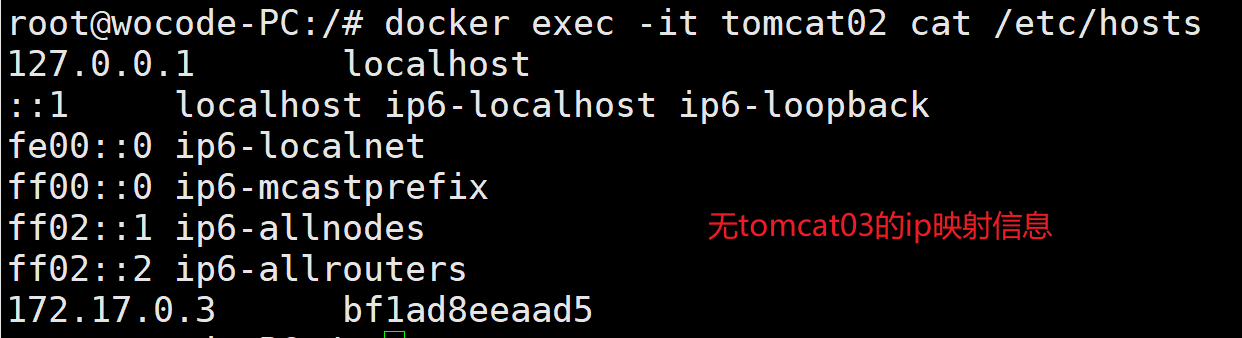

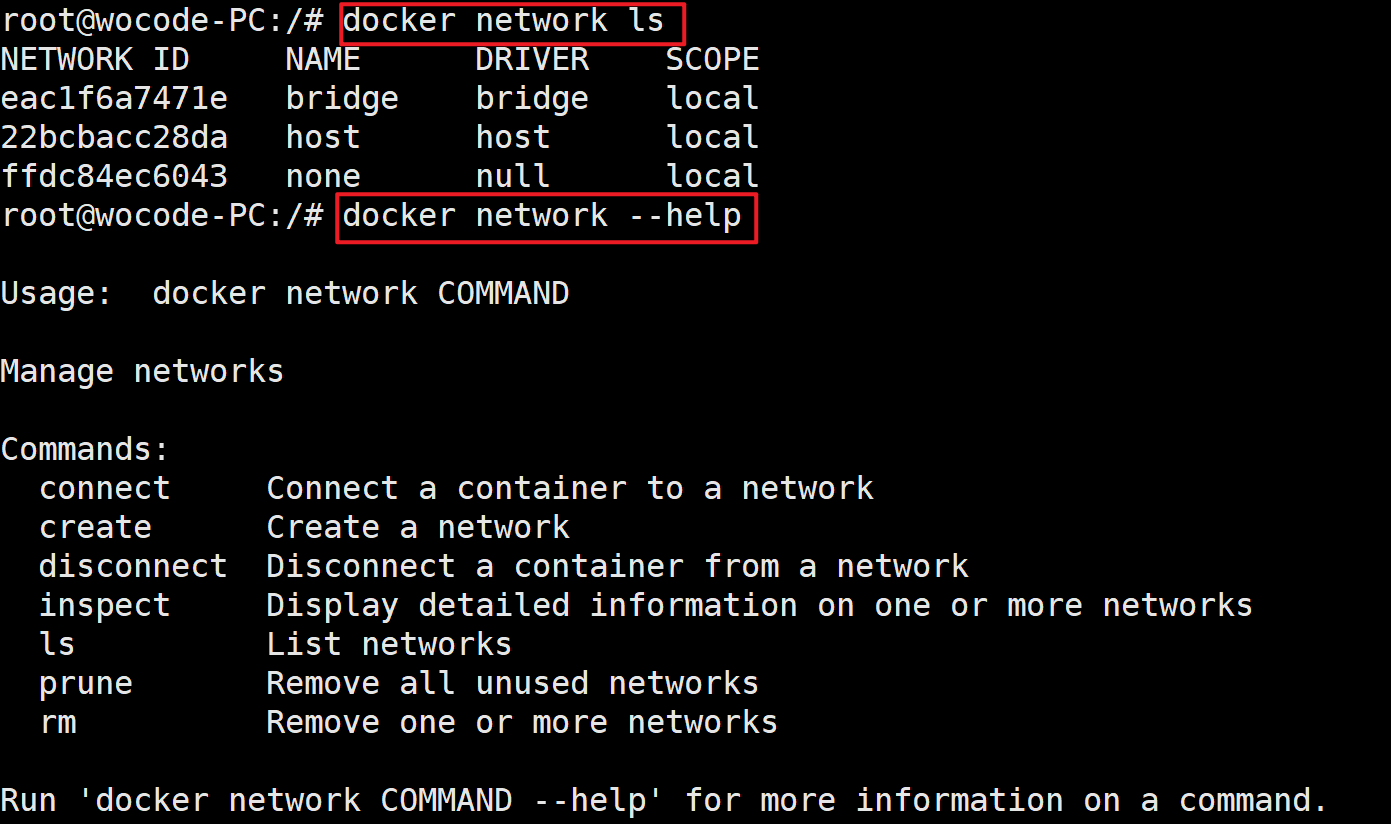

docker network命令可以查看docker的网络信息。![]()

![]()

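Custom networks

Everything so far has used the default bridge network, in which the default docker0 bridge acts as the gateway and veth pairs connect the containers to it. Its major drawback is that containers can only ping each other by IP address, not by container name. `--link` works around this only by editing the hosts file and is no longer recommended. In addition, all containers end up in a single subnet, which is hard to manage. These are the reasons for creating custom networks.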

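First, an overview of the four network modes.

① Bridge mode: the container has its own network namespace. When the Docker daemon starts, it creates a virtual bridge named docker0 on the host, and containers started on this host are attached to that bridge. A virtual bridge works much like a physical switch, so all containers on the host are connected to one layer-2 network through it. Docker assigns the container an IP address from the docker0 subnet and sets docker0's IP address as the container's default gateway. It creates a veth pair on the host, places one end in the new container as eth0 (the container's interface), leaves the other end on the host under a name like vethxxx, and attaches that end to the docker0 bridge.

Bridge mode is Docker's default: if `--net` is not given, the container runs in bridge mode. For example: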

    docker run -d -P --name tomcat01 tomcat
    # equivalent to
    docker run -d -P --name tomcat01 --net bridge tomcat

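② None mode: the container has its own network namespace, but Docker does not configure any networking in it. The container has no network interface (apart from loopback), no IP address, and no routes; adding an interface and configuring an IP address is left to us.

③ Host mode: the container uses the host's network stack directly. It is on the same network as the host but has no IP address of its own.

- Docker uses Linux namespaces for resource isolation: the PID namespace isolates processes, the mount namespace isolates file systems, the network namespace isolates networking, and so on.

- A network namespace provides an independent network environment whose interfaces, routes, and iptables rules are isolated from other network namespaces. A Docker container normally gets its own network namespace. A container started in host mode does not; it shares the host's network namespace. It gets no virtual interface or IP address of its own and uses the host's IP address and ports instead.

④ Container mode: the new container shares a network namespace with an existing container rather than with the host. The new container does not create its own interface or configure its own IP address; it shares the specified container's IP address, port range, and so on. Apart from networking, the two containers remain isolated in everything else, such as file systems and process lists. Processes in the two containers can communicate over the lo interface.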
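Each mode is selected with the `--net` option of `docker run`. As a sketch, the POSIX shell function below only prints the command for a given mode instead of running it, so it can be tried without touching Docker. `net_run_cmd` and the container name tomcat05 are hypothetical; the `container:<name>` form joins the network namespace of an existing container.

```shell
#!/bin/sh
# net_run_cmd MODE NAME IMAGE [TARGET]
# Print (not run) a docker run command for one of the four network modes.
net_run_cmd() {
  case $1 in
    bridge | host | none) net=$1 ;;
    container)
      [ -n "$4" ] || { echo "container mode needs a target container" >&2; return 1; }
      net="container:$4"
      ;;
    *) echo "unknown network mode: $1" >&2; return 1 ;;
  esac
  echo "docker run -d --name $2 --net $net $3"
}

net_run_cmd bridge    tomcat01 tomcat
net_run_cmd host      tomcat05 tomcat
net_run_cmd none      tomcat05 tomcat
net_run_cmd container tomcat05 tomcat tomcat01
```

Printing first and running later keeps the example harmless; the printed line can be copied into a terminal once it looks right.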

A custom network is created as follows:

① Create the custom network and inspect it.

    root@wocode-PC:/# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet
    a304f4518e69a731ab8ecc2abb44f88a5e174f06ee3dccf763c36c12010efad7
    root@wocode-PC:/# docker network ls
    NETWORK ID     NAME      DRIVER    SCOPE
    a994d511c807   bridge    bridge    local
    22bcbacc28da   host      host      local
    a304f4518e69   mynet     bridge    local
    ffdc84ec6043   none      null      local
    root@wocode-PC:/# docker network inspect mynet
    [
        {
            "Name": "mynet",
            "Id": "a304f4518e69a731ab8ecc2abb44f88a5e174f06ee3dccf763c36c12010efad7",
            "Created": "2021-04-23T14:57:07.782618168+08:00",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": {},
                "Config": [
                    {
                        "Subnet": "192.168.0.0/16",
                        "Gateway": "192.168.0.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {},
            "Options": {},
            "Labels": {}
        }
    ]

② Start containers attached to the mynet network.

    root@wocode-PC:/# docker run -d -P --name tomcat-mynet-01 --net mynet tomcat
    2193ed0dcd8ca461495fc708af5edac3df6cfa2f2cea4e1a6fc7dcde2936aeba
    root@wocode-PC:/# docker run -d -P --name tomcat-mynet-02 --net mynet tomcat
    5fca1664436e19f9e842d080e3c8ae3ee07bac2a945540d16a8e9fcb268b7560

Inspecting mynet now shows entries for the tomcat-mynet-01 and tomcat-mynet-02 containers.

    root@wocode-PC:/# docker network inspect mynet
    [
        {
            "Name": "mynet",
            "Id": "a304f4518e69a731ab8ecc2abb44f88a5e174f06ee3dccf763c36c12010efad7",
            "Created": "2021-04-23T14:57:07.782618168+08:00",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": {},
                "Config": [
                    {
                        "Subnet": "192.168.0.0/16",
                        "Gateway": "192.168.0.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {
                "2193ed0dcd8ca461495fc708af5edac3df6cfa2f2cea4e1a6fc7dcde2936aeba": {
                    "Name": "tomcat-mynet-01",
                    "EndpointID": "eebdcf020f076ba934d611cc1185615f2cf0aa0e29d410f1612af0e7e06c1a30",
                    "MacAddress": "02:42:c0:a8:00:02",
                    "IPv4Address": "192.168.0.2/16",
                    "IPv6Address": ""
                },
                "5fca1664436e19f9e842d080e3c8ae3ee07bac2a945540d16a8e9fcb268b7560": {
                    "Name": "tomcat-mynet-02",
                    "EndpointID": "e72f716d8854c8915b434929706ac3bc711b6e6c1253a021973f187adb468a0a",
                    "MacAddress": "02:42:c0:a8:00:03",
                    "IPv4Address": "192.168.0.3/16",
                    "IPv6Address": ""
                }
            },
            "Options": {},
            "Labels": {}
        }
    ]

③ Test communication between the containers. On a custom network, containers can ping each other both by IP address and by container name.

    root@wocode-PC:/# docker exec -it tomcat-mynet-01 ping 192.168.0.3
    PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.
    64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.168 ms
    64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.106 ms
    64 bytes from 192.168.0.3: icmp_seq=3 ttl=64 time=0.102 ms
    64 bytes from 192.168.0.3: icmp_seq=4 ttl=64 time=0.115 ms
    ^C
    --- 192.168.0.3 ping statistics ---
    4 packets transmitted, 4 received, 0% packet loss, time 61ms
    rtt min/avg/max/mdev = 0.102/0.122/0.168/0.029 ms
    root@wocode-PC:/# docker exec -it tomcat-mynet-01 ping tomcat-mynet-02
    PING tomcat-mynet-02 (192.168.0.3) 56(84) bytes of data.
    64 bytes from tomcat-mynet-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.061 ms
    64 bytes from tomcat-mynet-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.135 ms
    64 bytes from tomcat-mynet-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.095 ms
    64 bytes from tomcat-mynet-02.mynet (192.168.0.3): icmp_seq=4 ttl=64 time=0.087 ms
    ^C
    --- tomcat-mynet-02 ping statistics ---
    4 packets transmitted, 4 received, 0% packet loss, time 81ms
    rtt min/avg/max/mdev = 0.061/0.094/0.135/0.028 ms
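The name-to-address mapping that the second ping relies on is the same one listed under `Containers` in the `docker network inspect mynet` output. As a rough sketch, the POSIX shell function below extracts that mapping from pretty-printed inspect output with awk. `container_ips` is a hypothetical helper, and the sample is a shortened copy of the output above with the container IDs truncated. It depends on the one-key-per-line layout; for anything serious a real JSON parser is the safer choice.

```shell
#!/bin/sh
# container_ips: read pretty-printed `docker network inspect` JSON on stdin and
# print "name address" for every entry that carries an IPv4Address.
container_ips() {
  awk -F'"' '
    $2 == "Name"        { name = $4 }          # remember the most recent name
    $2 == "IPv4Address" { split($4, a, "/")    # drop the "/16" prefix length
                          print name, a[1] }
  '
}

# Shortened sample based on the inspect output of mynet (IDs truncated).
sample='[
    {
        "Name": "mynet",
        "Containers": {
            "2193ed0dcd8c": {
                "Name": "tomcat-mynet-01",
                "IPv4Address": "192.168.0.2/16"
            },
            "5fca1664436e": {
                "Name": "tomcat-mynet-02",
                "IPv4Address": "192.168.0.3/16"
            }
        }
    }
]'

printf '%s\n' "$sample" | container_ips
```

The top-level network name is overwritten before any address is seen, so only the container entries are printed.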

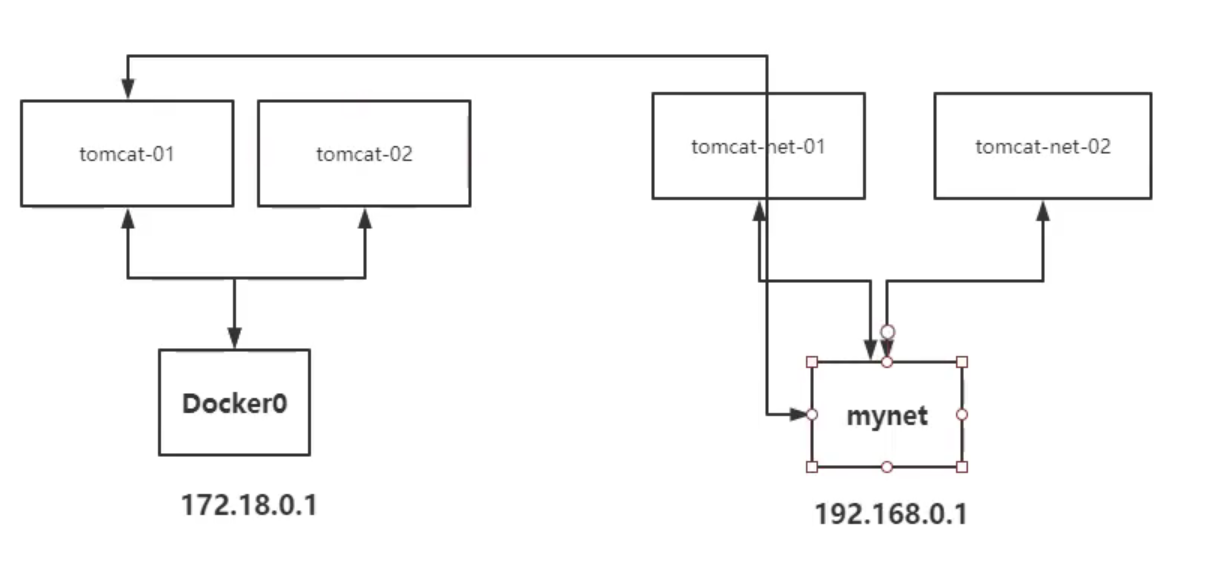

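Connecting containers across networks

So far the containers that talked to each other were on the same custom network. To let containers on different networks communicate, use the `docker network connect` command.

① Building on the previous step, create two containers, tomcat01 and tomcat02, on the default docker0 network.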

    root@wocode-PC:/# docker run -d -P --name tomcat01 tomcat
    3caa59e01b771efd925bebe502985d690e97b62503542530c850b69902dc73d4
    root@wocode-PC:/# docker run -d -P --name tomcat02 tomcat
    1c510aa3ee1ff1b25f9a304ff77c8a42d79d85decec546368c86dd73de5a7d1d

② Use the `docker network connect` command to link containers that sit on two different virtual networks.

    root@wocode-PC:/# docker network connect mynet tomcat01

After connecting, `docker network inspect` on the custom network shows that tomcat01, a container from a different network, has been added to mynet.

    root@wocode-PC:/# docker network inspect mynet
    [
        {
            "Name": "mynet",
            "Id": "a304f4518e69a731ab8ecc2abb44f88a5e174f06ee3dccf763c36c12010efad7",
            "Created": "2021-04-23T14:57:07.782618168+08:00",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": {},
                "Config": [
                    {
                        "Subnet": "192.168.0.0/16",
                        "Gateway": "192.168.0.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {
                "2193ed0dcd8ca461495fc708af5edac3df6cfa2f2cea4e1a6fc7dcde2936aeba": {
                    "Name": "tomcat-mynet-01",
                    "EndpointID": "eebdcf020f076ba934d611cc1185615f2cf0aa0e29d410f1612af0e7e06c1a30",
                    "MacAddress": "02:42:c0:a8:00:02",
                    "IPv4Address": "192.168.0.2/16",
                    "IPv6Address": ""
                },
                "3caa59e01b771efd925bebe502985d690e97b62503542530c850b69902dc73d4": {
                    "Name": "tomcat01",
                    "EndpointID": "3109cce392f4c1fb30a556a65b07627058c297db80df4c54f1c8058c5eb4d792",
                    "MacAddress": "02:42:c0:a8:00:04",
                    "IPv4Address": "192.168.0.4/16",
                    "IPv6Address": ""
                },
                "5fca1664436e19f9e842d080e3c8ae3ee07bac2a945540d16a8e9fcb268b7560": {
                    "Name": "tomcat-mynet-02",
                    "EndpointID": "e72f716d8854c8915b434929706ac3bc711b6e6c1253a021973f187adb468a0a",
                    "MacAddress": "02:42:c0:a8:00:03",
                    "IPv4Address": "192.168.0.3/16",
                    "IPv6Address": ""
                }
            },
            "Options": {},
            "Labels": {}
        }
    ]

③ Test that containers on different networks can now ping each other.

    root@wocode-PC:/# docker exec -it tomcat01 ping tomcat-mynet-01
    PING tomcat-mynet-01 (192.168.0.2) 56(84) bytes of data.
    64 bytes from tomcat-mynet-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.145 ms
    64 bytes from tomcat-mynet-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.110 ms
    64 bytes from tomcat-mynet-01.mynet (192.168.0.2): icmp_seq=3 ttl=64 time=0.112 ms
    64 bytes from tomcat-mynet-01.mynet (192.168.0.2): icmp_seq=4 ttl=64 time=0.069 ms
    ^C
    --- tomcat-mynet-01 ping statistics ---
    4 packets transmitted, 4 received, 0% packet loss, time 75ms
    rtt min/avg/max/mdev = 0.069/0.109/0.145/0.026 ms
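What `docker network connect` changes is network membership: tomcat01 now belongs to both the default bridge network and mynet, while tomcat02 still belongs only to bridge. The POSIX shell sketch below models that with a plain membership table and checks whether two containers share a network. It is a toy model of reachability, not a Docker API; `share_network` and the table format are made up for illustration.

```shell
#!/bin/sh
# One "network container" pair per line, reflecting the state after
# `docker network connect mynet tomcat01`.
memberships='bridge tomcat01
bridge tomcat02
mynet tomcat-mynet-01
mynet tomcat-mynet-02
mynet tomcat01'

# share_network A B: succeed when containers A and B sit on a common network.
share_network() {
  printf '%s\n' "$memberships" | awk -v a="$1" -v b="$2" '
    $2 == a { nets_a[$1] = 1 }
    $2 == b { nets_b[$1] = 1 }
    END {
      for (n in nets_a) if (n in nets_b) found = 1
      exit (found ? 0 : 1)
    }'
}

for c in tomcat01 tomcat02; do
  if share_network "$c" tomcat-mynet-01; then
    echo "$c can reach tomcat-mynet-01"
  else
    echo "$c shares no network with tomcat-mynet-01"
  fi
done
```

In this model tomcat02 would need its own `docker network connect mynet tomcat02` before it could reach the containers on mynet.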

Hands-on: deploying a Redis cluster (three masters, three replicas)

① Create a network for Redis.

    root@wocode-PC:/# docker network create redis --subnet 172.38.0.0/16
    791ad1cce08b0184aa04e6ccf1f6b787b9d302539050239df3a1e2b20d7a5845

② Create the six Redis configuration files with a script.

    for port in $(seq 1 6); do
      mkdir -p /mydata/redis/node-${port}/conf
      # Write one config file per node; ${port} expands inside the unquoted heredoc.
      cat << EOF > /mydata/redis/node-${port}/conf/redis.conf
    port 6379
    bind 0.0.0.0
    cluster-enabled yes
    cluster-config-file nodes.conf
    cluster-node-timeout 5000
    cluster-announce-ip 172.38.0.1${port}
    cluster-announce-port 6379
    cluster-announce-bus-port 16379
    appendonly yes
    EOF
    done

③ Start the six Redis services.
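The six `docker run` commands in this step differ only in the node number, so they can be produced by a loop. The POSIX shell sketch below prints the commands rather than executing them, which makes it safe to inspect first; piping its output to `sh` would run them. `redis_run_cmd` is a hypothetical helper; the paths, ports, addresses, and image tag are the ones used in this walkthrough.

```shell
#!/bin/sh
# redis_run_cmd N: print the docker run command for Redis node N (1..6).
redis_run_cmd() {
  n=$1
  printf '%s ' \
    "docker run -p 637${n}:6379 -p 1637${n}:16379 --name redis-${n}" \
    "-v /mydata/redis/node-${n}/data:/data" \
    "-v /mydata/redis/node-${n}/conf/redis.conf:/etc/redis/redis.conf" \
    "-d --net redis --ip 172.38.0.1${n}"
  printf '%s\n' "redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf"
}

for n in $(seq 1 6); do
  redis_run_cmd "$n"
done
```

Keeping the node number as the only variable also makes it harder for the published port, the volume path, and the static IP of a node to drift apart.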

    # Redis node 1
    docker run -p 6371:6379 -p 16371:16379 --name redis-1 \
      -v /mydata/redis/node-1/data:/data \
      -v /mydata/redis/node-1/conf/redis.conf:/etc/redis/redis.conf \
      -d --net redis --ip 172.38.0.11 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
    # Redis node 2
    docker run -p 6372:6379 -p 16372:16379 --name redis-2 \
      -v /mydata/redis/node-2/data:/data \
      -v /mydata/redis/node-2/conf/redis.conf:/etc/redis/redis.conf \
      -d --net redis --ip 172.38.0.12 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
    # Redis node 3
    docker run -p 6373:6379 -p 16373:16379 --name redis-3 \
      -v /mydata/redis/node-3/data:/data \
      -v /mydata/redis/node-3/conf/redis.conf:/etc/redis/redis.conf \
      -d --net redis --ip 172.38.0.13 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
    # Redis node 4
    docker run -p 6374:6379 -p 16374:16379 --name redis-4 \
      -v /mydata/redis/node-4/data:/data \
      -v /mydata/redis/node-4/conf/redis.conf:/etc/redis/redis.conf \
      -d --net redis --ip 172.38.0.14 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
    # Redis node 5
    docker run -p 6375:6379 -p 16375:16379 --name redis-5 \
      -v /mydata/redis/node-5/data:/data \
      -v /mydata/redis/node-5/conf/redis.conf:/etc/redis/redis.conf \
      -d --net redis --ip 172.38.0.15 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
    # Redis node 6
    docker run -p 6376:6379 -p 16376:16379 --name redis-6 \
      -v /mydata/redis/node-6/data:/data \
      -v /mydata/redis/node-6/conf/redis.conf:/etc/redis/redis.conf \
      -d --net redis --ip 172.38.0.16 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

    root@wocode-PC:/# docker ps
    CONTAINER ID   IMAGE                    COMMAND                  CREATED              STATUS              PORTS                                                                                      NAMES
    75a3a783f8f0   redis:5.0.9-alpine3.11   "docker-entrypoint.s…"   10 seconds ago       Up 9 seconds        0.0.0.0:6376->6379/tcp, :::6376->6379/tcp, 0.0.0.0:16376->16379/tcp, :::16376->16379/tcp   redis-6
    4274e29c32b7   redis:5.0.9-alpine3.11   "docker-entrypoint.s…"   About a minute ago   Up About a minute   0.0.0.0:6375->6379/tcp, :::6375->6379/tcp, 0.0.0.0:16375->16379/tcp, :::16375->16379/tcp   redis-5
    910a4926da45   redis:5.0.9-alpine3.11   "docker-entrypoint.s…"   2 minutes ago        Up 2 minutes        0.0.0.0:6374->6379/tcp, :::6374->6379/tcp, 0.0.0.0:16374->16379/tcp, :::16374->16379/tcp   redis-4
    d2ef46454523   redis:5.0.9-alpine3.11   "docker-entrypoint.s…"   2 minutes ago        Up 2 minutes        0.0.0.0:6373->6379/tcp, :::6373->6379/tcp, 0.0.0.0:16373->16379/tcp, :::16373->16379/tcp   redis-3
    abcee71e7076   redis:5.0.9-alpine3.11   "docker-entrypoint.s…"   3 minutes ago        Up 3 minutes        0.0.0.0:6372->6379/tcp, :::6372->6379/tcp, 0.0.0.0:16372->16379/tcp, :::16372->16379/tcp   redis-2
    abc9691f2428   redis:5.0.9-alpine3.11   "docker-entrypoint.s…"   4 minutes ago        Up 4 minutes        0.0.0.0:6371->6379/tcp, :::6371->6379/tcp, 0.0.0.0:16371->16379/tcp, :::16371->16379/tcp   redis-1

④ Create the cluster.

    root@wocode-PC:/# docker exec -it redis-1 /bin/sh
    /data # ls
    appendonly.aof  nodes.conf
    /data # redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1
    >>> Performing hash slots allocation on 6 nodes...
    Master[0] -> Slots 0 - 5460
    Master[1] -> Slots 5461 - 10922
    Master[2] -> Slots 10923 - 16383
    Adding replica 172.38.0.15:6379 to 172.38.0.11:6379
    Adding replica 172.38.0.16:6379 to 172.38.0.12:6379
    Adding replica 172.38.0.14:6379 to 172.38.0.13:6379
    M: f71d98557338d03dc072c1514fda388fbdbe6e93 172.38.0.11:6379
       slots:[0-5460] (5461 slots) master
    M: a104a1e539c61683c3d218a7d8b93d31aee0de47 172.38.0.12:6379
       slots:[5461-10922] (5462 slots) master
    M: ffd6595172fc8a4a2d02064f2fec354c948599a4 172.38.0.13:6379
       slots:[10923-16383] (5461 slots) master
    S: 3f344066c7a7302b8fe07771a6c4146ca1479eb1 172.38.0.14:6379
       replicates ffd6595172fc8a4a2d02064f2fec354c948599a4
    S: 45e4c5d706e1fd90327ef4556ca31b7705d66b06 172.38.0.15:6379
       replicates f71d98557338d03dc072c1514fda388fbdbe6e93
    S: b4b32690178cf8626a4f91026e86c9cdb288e857 172.38.0.16:6379
       replicates a104a1e539c61683c3d218a7d8b93d31aee0de47
    Can I set the above configuration? (type 'yes' to accept): yes
    >>> Nodes configuration updated
    >>> Assign a different config epoch to each node
    >>> Sending CLUSTER MEET messages to join the cluster
    Waiting for the cluster to join
    ....
    >>> Performing Cluster Check (using node 172.38.0.11:6379)
    M: f71d98557338d03dc072c1514fda388fbdbe6e93 172.38.0.11:6379
       slots:[0-5460] (5461 slots) master
       1 additional replica(s)
    M: ffd6595172fc8a4a2d02064f2fec354c948599a4 172.38.0.13:6379
       slots:[10923-16383] (5461 slots) master
       1 additional replica(s)
    S: b4b32690178cf8626a4f91026e86c9cdb288e857 172.38.0.16:6379
       slots: (0 slots) slave
       replicates a104a1e539c61683c3d218a7d8b93d31aee0de47
    M: a104a1e539c61683c3d218a7d8b93d31aee0de47 172.38.0.12:6379
       slots:[5461-10922] (5462 slots) master
       1 additional replica(s)
    S: 3f344066c7a7302b8fe07771a6c4146ca1479eb1 172.38.0.14:6379
       slots: (0 slots) slave
       replicates ffd6595172fc8a4a2d02064f2fec354c948599a4
    S: 45e4c5d706e1fd90327ef4556ca31b7705d66b06 172.38.0.15:6379
       slots: (0 slots) slave
       replicates f71d98557338d03dc072c1514fda388fbdbe6e93
    [OK] All nodes agree about slots configuration.
    >>> Check for open slots...
    >>> Check slots coverage...
    [OK] All 16384 slots covered.

⑤ Test the cluster.

    /data # redis-cli -c
    127.0.0.1:6379> cluster info
    cluster_state:ok
    cluster_slots_assigned:16384
    cluster_slots_ok:16384
    cluster_slots_pfail:0
    cluster_slots_fail:0
    cluster_known_nodes:6
    cluster_size:3
    cluster_current_epoch:6
    cluster_my_epoch:1
    cluster_stats_messages_ping_sent:216
    cluster_stats_messages_pong_sent:230
    cluster_stats_messages_sent:446
    cluster_stats_messages_ping_received:225
    cluster_stats_messages_pong_received:216
    cluster_stats_messages_meet_received:5
    cluster_stats_messages_received:446
    127.0.0.1:6379> cluster nodes
    ffd6595172fc8a4a2d02064f2fec354c948599a4 172.38.0.13:6379@16379 master - 0 1619165864219 3 connected 10923-16383
    b4b32690178cf8626a4f91026e86c9cdb288e857 172.38.0.16:6379@16379 slave a104a1e539c61683c3d218a7d8b93d31aee0de47 0 1619165863508 6 connected
    a104a1e539c61683c3d218a7d8b93d31aee0de47 172.38.0.12:6379@16379 master - 0 1619165863000 2 connected 5461-10922
    f71d98557338d03dc072c1514fda388fbdbe6e93 172.38.0.11:6379@16379 myself,master - 0 1619165862000 1 connected 0-5460
    3f344066c7a7302b8fe07771a6c4146ca1479eb1 172.38.0.14:6379@16379 slave ffd6595172fc8a4a2d02064f2fec354c948599a4 0 1619165864000 4 connected
    45e4c5d706e1fd90327ef4556ca31b7705d66b06 172.38.0.15:6379@16379 slave f71d98557338d03dc072c1514fda388fbdbe6e93 0 1619165863202 5 connected
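The slot ranges that `redis-cli --cluster create` printed (0 - 5460, 5461 - 10922, 10923 - 16383) are an even split of the 16384 hash slots across the three masters. The POSIX shell sketch below reproduces those numbers with integer arithmetic by rounding each share boundary. It is an illustration that happens to match the output above, not a copy of redis-cli's own code, and `slot_ranges` is a hypothetical name.

```shell
#!/bin/sh
# slot_ranges MASTER_COUNT: split the 16384 hash slots evenly across the masters.
slot_ranges() {
  masters=$1
  total=16384
  first=0
  i=1
  while [ "$i" -le "$masters" ]; do
    # End of the i-th share: round(i * total / masters) - 1, in integer math.
    last=$(( (2 * i * total + masters) / (2 * masters) - 1 ))
    echo "Master[$((i - 1))] -> Slots $first - $last"
    first=$((last + 1))
    i=$((i + 1))
  done
}

slot_ranges 3
```

Because 16384 is not divisible by 3, one master ends up with 5462 slots and the other two with 5461, which is what the cluster check reported.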