Kubernetes Service Monitoring

1. Prometheus

1.1 Introduction

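Prometheus is an open-source monitoring and alerting system with a built-in time series database, originally developed at SoundCloud. It is written in Go and was inspired by Google's internal Borgmon monitoring system. In 2016 it joined the Cloud Native Computing Foundation (CNCF), which Google helped launch under the Linux Foundation, as the foundation's second hosted project after Kubernetes. The project has a very active open-source community. Compared with Heapster (a now-retired Kubernetes subproject for collecting cluster performance data), Prometheus is more complete and more capable, and it performs well enough to monitor clusters of tens of thousands of machines.

Components:

- Metrics Server: aggregates cluster resource usage data for consumers inside the cluster, such as `kubectl top` and the HPA.
- Prometheus Operator: deploys and manages Prometheus, Alertmanager and related monitoring components on Kubernetes through custom resources.
- Node Exporter: exposes key hardware and operating system metrics from each node.
- kube-state-metrics: exposes the state of Kubernetes resource objects (Deployments, Pods, and so on), on which alerting rules can be built.
- Prometheus: pulls metrics over HTTP from the apiserver, scheduler, controller-manager, kubelet and other targets, and stores them.
- Grafana: a platform for visualizing the collected data in dashboards.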
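Because Prometheus pulls, every target simply serves a plain-text page (usually at `/metrics`) in the Prometheus text exposition format: one `name{labels} value` sample per line, plus `#` lines carrying HELP and TYPE metadata. A minimal sketch of reading one metric from such a page, assuming a hypothetical helper named `metric_value`; it is for illustration only, since Prometheus performs the actual scraping and parsing:

```shell
#!/usr/bin/env bash
# metric_value NAME
# Reads a page in the Prometheus text exposition format from stdin and prints
# the value of every sample whose metric name is NAME.
# Hypothetical helper for illustration only; it assumes label values contain
# no spaces, since it splits each line on whitespace.
metric_value() {
  awk -v name="$1" '
    /^#/ || NF == 0 { next }        # skip HELP/TYPE comments and blank lines
    {
      base = $1                     # e.g. node_load1 or http_requests_total{code="200"}
      sub(/[{].*/, "", base)        # drop the label set, keep the metric name
      if (base == name) print $2    # the second field is the sample value
    }'
}
```

It can be fed any target's page, for example with `curl -s <target>/metrics | metric_value node_load1`, where `<target>` is a placeholder for a reachable scrape endpoint.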

1.2 Installation

    [root@master Prometheus]# git clone https://github.com/coreos/kube-prometheus.git

The repository has since moved to `prometheus-operator/kube-prometheus`; GitHub redirects the old URL.

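After deploying the manifests from the cloned repository (in this environment the Prometheus service is exposed on NodePort 30200 and Grafana on NodePort 30100), open [Prometheus Time Series Collection and Processing Server](http://192.168.200.20:30200/graph) to see the web UI.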

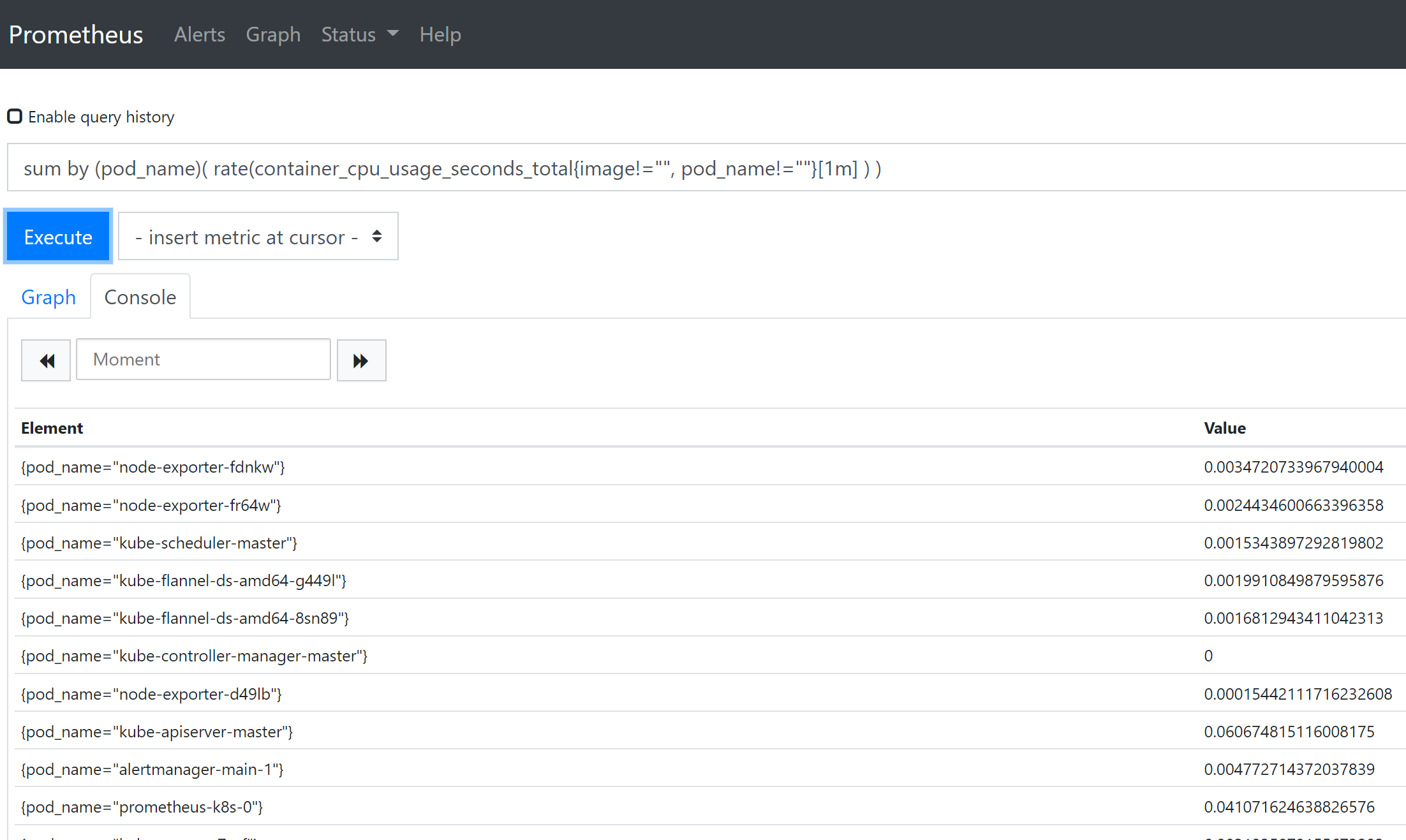

除了页面可点击获取的信息外,

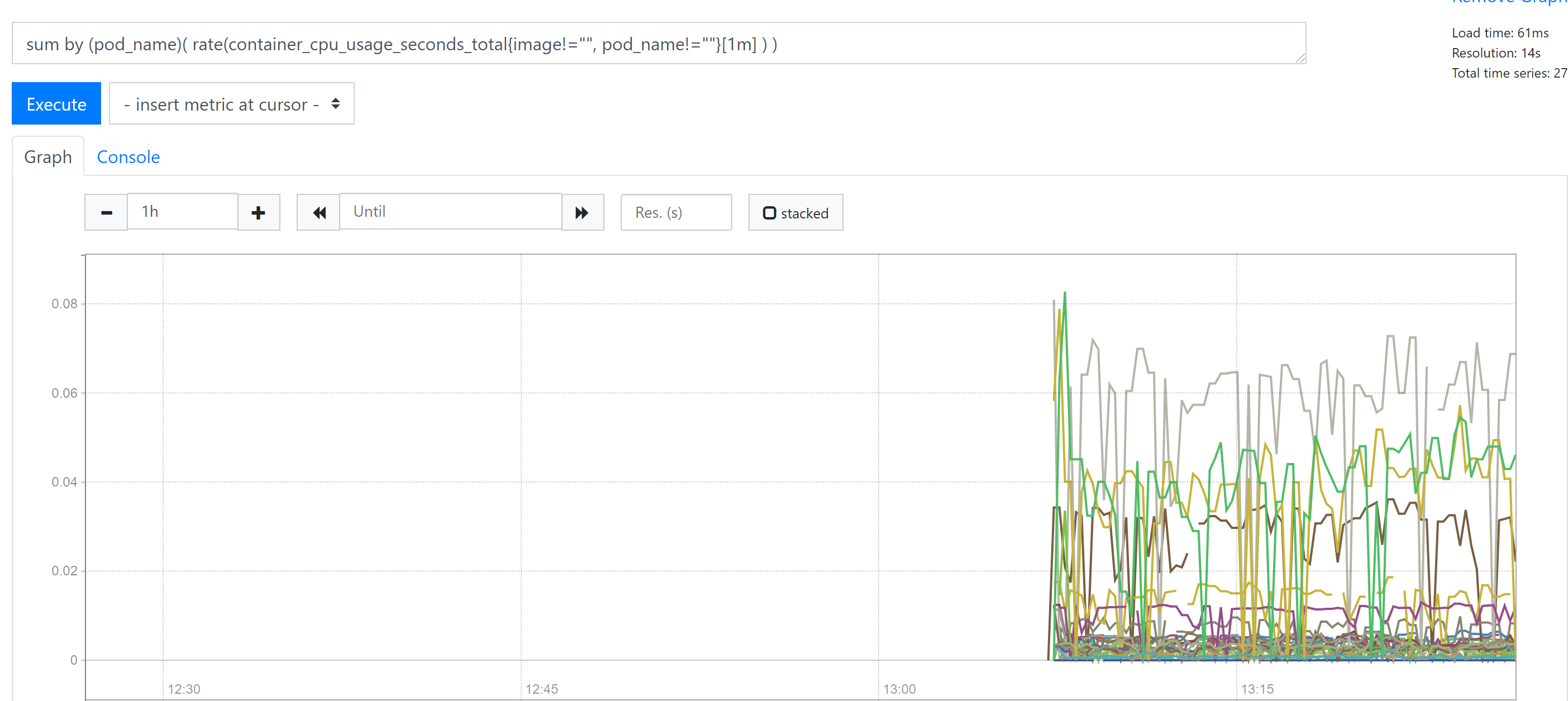

prometheus的WEB界面上提供了基本的查询K8S集群中每个Pod的CPU使用情况,查询条件如下:1

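    sum by (pod_name) (rate(container_cpu_usage_seconds_total{image!="", pod_name!=""}[1m]))

On Kubernetes 1.16 and later, cAdvisor labels these series with `pod` instead of `pod_name`, so replace `pod_name` with `pod` in the query there.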


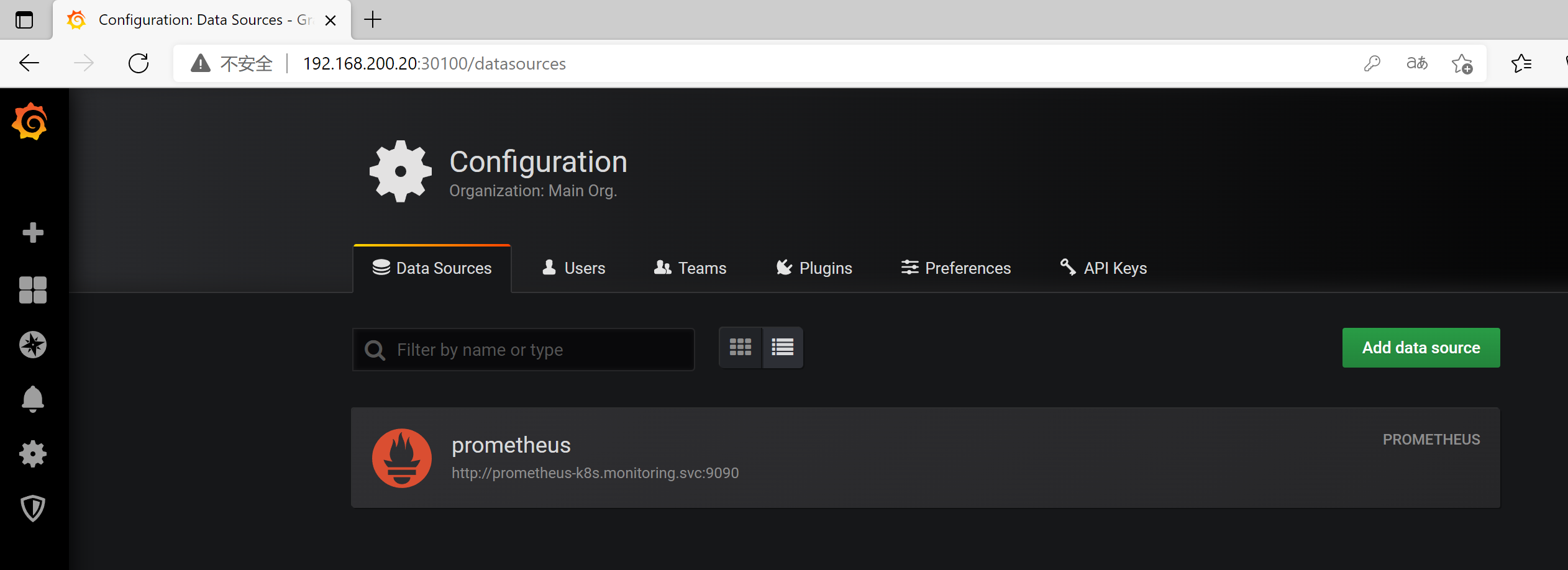
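The query works because `container_cpu_usage_seconds_total` is a counter of CPU seconds consumed, so its per-second rate is the number of cores in use, and `sum by` adds up the per-container series of each Pod. A minimal sketch of the same computation in bash and awk, assuming a hypothetical input of one sample per line (`series pod timestamp value`) in time order; unlike PromQL's `rate()`, it ignores counter resets and does not extrapolate to the edges of the window:

```shell
#!/usr/bin/env bash
# pod_cpu_rate: simplified model of
#   sum by (pod_name) (rate(container_cpu_usage_seconds_total[1m]))
# stdin: one sample per line, "series_id pod_name unix_ts counter_value",
# all inside the range window and in time order per series (hypothetical format).
pod_cpu_rate() {
  awk '
    {
      s = $1
      if (!(s in first_ts)) { first_ts[s] = $3 + 0; first_v[s] = $4 + 0; pod[s] = $2 }
      last_ts[s] = $3 + 0; last_v[s] = $4 + 0
    }
    END {
      for (s in first_ts)
        if (last_ts[s] > first_ts[s])   # a rate needs at least two samples
          sum[pod[s]] += (last_v[s] - first_v[s]) / (last_ts[s] - first_ts[s])
      for (p in sum) printf "%s %.3f\n", p, sum[p]
    }' | sort
}
```

A result of 0.300 for a Pod means it used 0.3 cores on average over the window, which `kubectl top pod` would show as 300m.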

Open [Grafana](http://192.168.200.20:30100/login) and log in with the default username and password, both `admin`.

2. Horizontal Pod Autoscaling

Horizontal Pod Autoscaling automatically adjusts the number of Pods in a ReplicationController, Deployment, or ReplicaSet based on observed CPU utilization.

① Load the `hpa-example` image on all nodes.

    [root@master ~]# docker load -i hpa-example.tar
    [root@node1 ~]# docker load -i hpa-example.tar
    [root@node2 ~]# docker load -i hpa-example.tar
    [root@node1 ~]# docker images
    REPOSITORY                             TAG       IMAGE ID       CREATED       SIZE
    gcr.io/google_containers/hpa-example   latest    4ca4c13a6d7c   6 years ago   481MB

② Create a Deployment and a Service named `php-apache`.

    [root@master ~]# kubectl run php-apache --image=gcr.io/google_containers/hpa-example --requests=cpu=200m --expose --port=80
    kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.
    service/php-apache created
    deployment.apps/php-apache created
    # Set the Deployment's image pull policy to Never (imagePullPolicy: Never) so the locally loaded image is used
    [root@master ~]# kubectl edit deployment php-apache
    deployment.extensions/php-apache edited
    [root@master ~]# kubectl get pod
    NAME                         READY   STATUS    RESTARTS   AGE
    php-apache-f44dcdb46-lp2q2   1/1     Running   0          67s
    [root@master ~]# kubectl get deployment
    NAME         READY   UP-TO-DATE   AVAILABLE   AGE
    php-apache   1/1     1            1           3m49s

As the deprecation warning indicates, newer kubectl releases no longer create a Deployment with `kubectl run`; use `kubectl create deployment` there instead.

③ Create the HorizontalPodAutoscaler.

    # Add Pods when average CPU utilization (relative to the 200m request) exceeds 50%, up to 10 Pods;
    # remove Pods when it falls below 50%, down to at least 1 Pod.
    [root@master ~]# kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10
    horizontalpodautoscaler.autoscaling/php-apache autoscaled
    [root@master ~]# kubectl get hpa
    NAME         REFERENCE               TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
    php-apache   Deployment/php-apache   0%/50%    1         10        1          37s

④ Generate load and watch the number of replicas.

    [root@master ~]# kubectl run -i --tty load-generator --image=busybox /bin/sh
    kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.
    If you don't see a command prompt, try pressing enter.
    / # while true; do wget -q -O- http://php-apache.default.svc.cluster.local; done
    # In a second terminal on the master node:
    [root@master ~]# kubectl get hpa
    NAME         REFERENCE               TARGETS    MINPODS   MAXPODS   REPLICAS   AGE
    php-apache   Deployment/php-apache   172%/50%   1         10        4          3m10s
    [root@master ~]# kubectl get pod
    NAME                             READY   STATUS    RESTARTS   AGE
    load-generator-7d549cd44-k7qt7   1/1     Running   0          2m1s
    php-apache-f44dcdb46-4bff5       1/1     Running   0          19s
    php-apache-f44dcdb46-58xxk       1/1     Running   0          49s
    php-apache-f44dcdb46-j7lb5       1/1     Running   0          49s
    php-apache-f44dcdb46-lp2q2       1/1     Running   0          7m18s
    php-apache-f44dcdb46-tkgls       1/1     Running   0          49s
    php-apache-f44dcdb46-wp6pz       1/1     Running   0          19s
    [root@master ~]# kubectl top pod
    NAME                         CPU(cores)   MEMORY(bytes)
    php-apache-f44dcdb46-58xxk   198m         14Mi
    php-apache-f44dcdb46-lp2q2   204m         31Mi
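The Kubernetes documentation gives the HPA scaling rule as desiredReplicas = ceil[currentReplicas × currentMetricValue / desiredMetricValue], where CPU utilization is measured against the containers' CPU requests, and no change is made while the ratio stays within a tolerance (0.1 by default). A minimal sketch in bash integer arithmetic, assuming all Pods share the same CPU request; the function names are hypothetical, and the sketch ignores Pod readiness, missing metrics and scale-down stabilization, which the real controller handles:

```shell
#!/usr/bin/env bash
# Simplified sketch of the HPA calculation for a CPU-utilization target.

# avg_utilization REQUEST_MILLI USAGE_MILLI...
# Average CPU utilization in percent of the request, truncated to an integer.
avg_utilization() {
  local request=$1; shift
  local total=0 u
  for u in "$@"; do
    total=$(( total + u ))
  done
  echo $(( total * 100 / (request * $#) ))
}

# desired_replicas CURRENT CURRENT_PCT TARGET_PCT MIN MAX
desired_replicas() {
  local cur=$1 cur_pct=$2 target=$3 min=$4 max=$5
  local diff=$(( cur_pct - target )) desired
  if (( diff < 0 )); then diff=$(( -diff )); fi
  # Tolerance 0.1: |cur_pct/target - 1| <= 0.1  <=>  10*|cur_pct - target| <= target
  if (( diff * 10 <= target )); then
    desired=$cur
  else
    # ceil(cur * cur_pct / target) in integer arithmetic
    desired=$(( (cur * cur_pct + target - 1) / target ))
  fi
  # clamp to the --min/--max range given to kubectl autoscale
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}
```

With the numbers above, one replica at 172% against the 50% target gives `desired_replicas 1 172 50 1 10`, that is ceil(3.44) = 4, consistent with the REPLICAS column of `kubectl get hpa`. The two Pods listed by `kubectl top pod` (198m and 204m against a 200m request) average 100%, still twice the target, so the HPA keeps adding Pods while the load lasts.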

3. Resource Limits

3.1 Pod

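Kubernetes enforces resource limits through cgroups. A cgroup is a set of kernel attributes that controls how a container's processes are run, and there are cgroup controllers for memory, CPU, and various devices. By default, Pods run without CPU or memory limits, which means any Pod can consume as much CPU and memory as the node it runs on can provide. Application Pods are therefore usually given resource constraints through the `resources` field of each container: `requests` (the amount reserved for the container when it is scheduled) and `limits` (the maximum amount the container may use). For example: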

    spec:
      containers:
      - image: xxxx
        imagePullPolicy: Always
        name: auth
        resources:
          limits:
            cpu: "4"
            memory: 2Gi
          requests:
            cpu: 250m
            memory: 250Mi
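The kubelet turns these fields into cgroup settings. A sketch, assuming cgroup v1 and the default CFS period of 100000 µs (cgroup v2 uses `cpu.weight`, `cpu.max` and `memory.max` with different conversions): a CPU request becomes `cpu.shares` = millicores × 1024 / 1000 (minimum 2), a CPU limit becomes `cpu.cfs_quota_us` = millicores × 100000 / 1000, and a memory limit becomes `memory.limit_in_bytes`. The helper names are hypothetical, and only integer memory quantities are handled:

```shell
#!/usr/bin/env bash
# cpu_milli QUANTITY: CPU quantity ("4", "250m", "0.5") -> millicores.
cpu_milli() {
  local q=$1
  if [[ $q == *m ]]; then
    echo "${q%m}"
  elif [[ $q == *.* ]]; then
    # decimal cores, e.g. 0.5 -> 500 (at most three decimal places kept)
    local int=${q%%.*} frac=${q#*.}
    frac=$(printf '%-3s' "$frac"); frac=${frac// /0}   # right-pad with zeros
    echo $(( 10#${int:-0} * 1000 + 10#${frac:0:3} ))
  else
    echo $(( q * 1000 ))
  fi
}

# mem_bytes QUANTITY: integer memory quantity ("2Gi", "250Mi", "1G") -> bytes.
mem_bytes() {
  local q=$1
  case $q in
    *Ki) echo $(( ${q%Ki} * 1024 )) ;;
    *Mi) echo $(( ${q%Mi} * 1024 ** 2 )) ;;
    *Gi) echo $(( ${q%Gi} * 1024 ** 3 )) ;;
    *Ti) echo $(( ${q%Ti} * 1024 ** 4 )) ;;
    *k)  echo $(( ${q%k} * 1000 )) ;;
    *M)  echo $(( ${q%M} * 1000 ** 2 )) ;;
    *G)  echo $(( ${q%G} * 1000 ** 3 )) ;;
    *T)  echo $(( ${q%T} * 1000 ** 4 )) ;;
    *)   echo $(( q )) ;;
  esac
}

# cgroup v1 values derived from millicores (default 100000 us CFS period assumed)
cpu_shares() {
  local s=$(( $1 * 1024 / 1000 ))
  if (( s < 2 )); then s=2; fi
  echo "$s"
}
cfs_quota_us() { echo $(( $1 * 100000 / 1000 )); }

# API validation rule: a request may not exceed the corresponding limit.
check_req_le_limit() { (( $1 <= $2 )); }
```

For the `auth` container above, the 250m request maps to 256 CPU shares, the 4-core limit to a quota of 400000 µs per 100000 µs period, and the 2Gi limit to a memory cap of 2147483648 bytes; the 250Mi memory request is used for scheduling rather than being enforced as a cgroup v1 limit.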

3.2 Namespace

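Compute resource quota, which caps the Pod count and the total requests and limits of all Pods in the namespace: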

    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: compute-resources
    spec:
      hard:
        pods: "20"
        requests.cpu: "20"
        requests.memory: 100Gi
        limits.cpu: "40"
        limits.memory: 200Gi

Object count quota:

    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: object-counts
    spec:
      hard:
        configmaps: "10"
        persistentvolumeclaims: "4"
        replicationcontrollers: "20"
        secrets: "10"
        services: "10"
        services.loadbalancers: "2"

A CPU and memory LimitRange, which assigns default requests and limits to containers that do not set their own (to avoid OOM):

    apiVersion: v1
    kind: LimitRange
    metadata:
      name: mem-limit-range
    spec:
      limits:
      - default:          # i.e. the default limit
          memory: 50Gi
          cpu: 5
        defaultRequest:   # i.e. the default request
          memory: 1Gi
          cpu: 1
        type: Container
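The two kinds of objects interact. The Kubernetes documentation notes that once a ResourceQuota covers compute resources such as `requests.cpu` or `limits.memory`, Pods must specify those values or they may be rejected, and it suggests a LimitRange to supply defaults. As a worked example, assuming `compute-resources` and `mem-limit-range` are applied to the same namespace and every Pod runs a single container that sets neither requests nor limits, each Pod is charged the defaults (request 1 CPU / 1Gi, limit 5 CPU / 50Gi), so `limits.memory` admits only 200Gi / 50Gi = 4 such Pods, far fewer than `pods: "20"`. A bash sketch of that arithmetic; the function names are hypothetical:

```shell
#!/usr/bin/env bash
# How many defaulted single-container Pods fit into compute-resources,
# assuming both objects live in the same namespace.
# CPU is in millicores, memory in GiB.

# LimitRange defaults (mem-limit-range)
DEFAULT_LIMIT_CPU=5000;   DEFAULT_LIMIT_MEM_GI=50
DEFAULT_REQUEST_CPU=1000; DEFAULT_REQUEST_MEM_GI=1

# ResourceQuota hard limits (compute-resources)
HARD_PODS=20
HARD_REQ_CPU=20000; HARD_REQ_MEM_GI=100
HARD_LIM_CPU=40000; HARD_LIM_MEM_GI=200

# min N...: smallest of the integer arguments
min() {
  local m=$1 x; shift
  for x in "$@"; do
    if (( x < m )); then m=$x; fi
  done
  echo "$m"
}

# Each quota dimension admits floor(hard / per-Pod charge) Pods;
# the tightest dimension decides.
max_defaulted_pods() {
  min "$HARD_PODS" \
      $(( HARD_REQ_CPU / DEFAULT_REQUEST_CPU )) \
      $(( HARD_REQ_MEM_GI / DEFAULT_REQUEST_MEM_GI )) \
      $(( HARD_LIM_CPU / DEFAULT_LIMIT_CPU )) \
      $(( HARD_LIM_MEM_GI / DEFAULT_LIMIT_MEM_GI ))
}

# admit_req_cpu USED_MILLI NEW_MILLI: succeeds if a new Pod's CPU request still
# fits under requests.cpu; the quota applies the same check to every dimension.
admit_req_cpu() { (( $1 + $2 <= HARD_REQ_CPU )); }
```

If more Pods are needed in such a namespace, either the default memory limit has to shrink or `limits.memory` has to grow.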